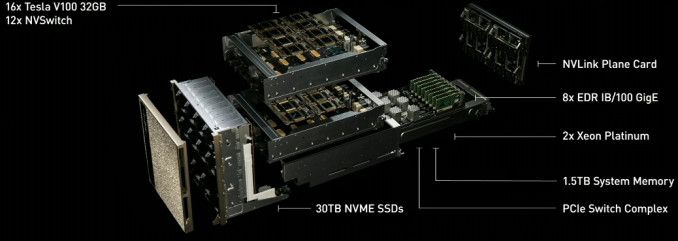

NVIDIA's new supercomputer, the DGX-2, builds on the previous DGX-1 in several ways, doubling the performance at an exorbitant price. First, it introduces the new NVIDIA NVSwitch, which enables 300 GB/s chip-to-chip communication, 12 times the speed of a PCIe connection. Together with NVLink2, this allows sixteen GPUs to be grouped into a single system, bringing the total bandwidth to over 14 TB/s. Add a pair of Xeon CPUs, 1.5 TB of RAM, and 30 TB of NVMe storage, and you get a system that consumes 10 kW and weighs 350 lbs but, according to NVIDIA, delivers twice the performance of the DGX-1.

The DGX-2 is twice as powerful as the DGX-1

NVIDIA is also touting 2 PFLOPs of performance when using the tensor cores.

The green company has used a two-board design. The concept photo indicates that there are 12 NVSwitches (216 ports in total) in the system to maximize the bandwidth available between GPUs. With six NVLink ports per Tesla V100 GPU, each GPU carrying 32 GB of HBM2 memory, the sixteen Teslas alone would take up 96 of those ports if NVIDIA has them fully wired to maximize the bandwidth of each GPU.
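The port figures above can be checked with a little arithmetic. The sketch below is only an illustration built from the numbers quoted in this article; the function and variable names are made up for the example, and it makes no claim about how the remaining switch ports are actually wired.

```python
# Port-budget arithmetic using only the figures quoted in the article.
NVSWITCH_COUNT = 12        # NVSwitches visible in the concept photo
TOTAL_SWITCH_PORTS = 216   # total switch ports quoted for the system
GPU_COUNT = 16             # Tesla V100 GPUs in a DGX-2
PORTS_PER_GPU = 6          # NVLink ports per Tesla V100


def port_budget(switches, total_ports, gpus, ports_per_gpu):
    """Return a simple breakdown of switch ports (hypothetical helper)."""
    ports_per_switch = total_ports // switches
    gpu_facing = gpus * ports_per_gpu
    return {
        "ports_per_switch": ports_per_switch,
        "gpu_facing_ports": gpu_facing,
        # Ports left over once every GPU link is attached; their use is
        # not described in the article, so no assumption is made here.
        "remaining_ports": total_ports - gpu_facing,
    }


budget = port_budget(NVSWITCH_COUNT, TOTAL_SWITCH_PORTS, GPU_COUNT, PORTS_PER_GPU)
for name, value in budget.items():
    print(f"{name}: {value}")
```

With these inputs, each switch works out to 18 ports, the GPUs occupy 96 of them, and 120 are left over.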

The design of the DGX-2 means that all 16 GPUs can pool their memory into a unified space, though with the usual tradeoffs of going off-chip. Much like the increased memory capacity of the Tesla V100, one of NVIDIA’s goals in this case is to create a system capable of keeping workloads in memory that would be too large for an 8 GPU cluster.
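To put the memory argument in numbers, here is a rough sketch that compares the pooled HBM2 of 16 GPUs with that of 8, using the 32 GB per GPU figure from this article. The helper names and the 400 GB workload are hypothetical, and the check ignores any overhead from the framework, the drivers, or off-chip access.

```python
# Pooled HBM2 capacity, assuming 32 GB per Tesla V100 as quoted in the article.
HBM2_PER_GPU_GB = 32


def pooled_hbm2_gb(gpu_count, per_gpu_gb=HBM2_PER_GPU_GB):
    """Total HBM2 if every GPU contributes its full capacity (idealized)."""
    return gpu_count * per_gpu_gb


def fits_in_pool(workload_gb, gpu_count):
    """True if a workload of the given size fits in the idealized pool."""
    return workload_gb <= pooled_hbm2_gb(gpu_count)


workload_gb = 400  # hypothetical in-memory workload, for illustration only

for gpus in (8, 16):
    print(f"{gpus} GPUs: {pooled_hbm2_gb(gpus)} GB pooled, "
          f"fits {workload_gb} GB workload: {fits_in_pool(workload_gb, gpus)}")
```

Under these assumptions, 16 GPUs offer 512 GB of pooled memory against 256 GB for 8, so the example workload only fits on the larger system.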

The DGX-2 is aimed at companies that focus on deep learning and can make a considerable investment. The system is priced at $400,000, compared with $150,000 for the original DGX-1.