Nvidia presented a neural network that transforms sketches into photorealistic scenes.

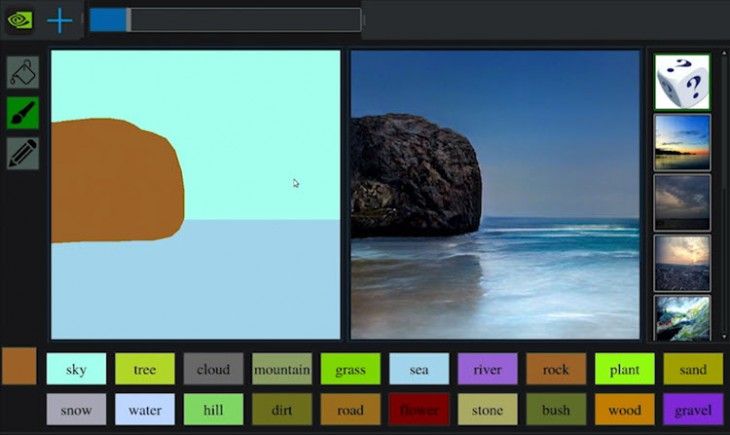

This AI-based system, called GauGAN, was trained on Flickr photos. By analyzing millions of objects, it learned the relationships between them so that it can interpret each one in context. This is what allows it to produce dynamic scenes like the one in the image.

The user only needs to draw a sketch. The AI then interprets the scene and completes the image with details appropriate to that context. Put simply, the AI works like an intelligent brush that fills in a coloring book in full detail.

Naturally, this capability opens up a world of possibilities for creative minds:

GauGAN could provide a powerful tool for creating virtual worlds for everyone, from architects and urban planners to landscape designers and game developers. With artificial intelligence that understands what the real world looks like, these professionals could create better prototypes of ideas and make rapid changes to a synthetic scene.

We can see a demonstration in this video:

GauGAN has three tools (pencil, brush, and paint bucket) and a set of objects that designers can select for their drawings. Depending on the choices made and the style used, the AI generates a unique scene, even when two users draw the same sketch.

Although some details of how this neural network behaves still need to be worked out, the results are impressive. All the technical details are available in this Nvidia report.