There are many applications today for converting 3D objects into a 2D perspective, but very few are available that can do the reverse. In short, if you want an object in 3D, you need to model it in 3D. Once that hard work is done, converting it to a 2D image is a fairly simple process. Well, perhaps not that simple, but you get the idea. Nvidia aims to change this with an algorithm that makes it easy to convert a 2D image to 3D.

Nvidia has introduced an AI algorithm that can convert 2D images to 3D

Nvidia has just announced that a new AI model it is developing has successfully created 3D models from a single, static 2D image.

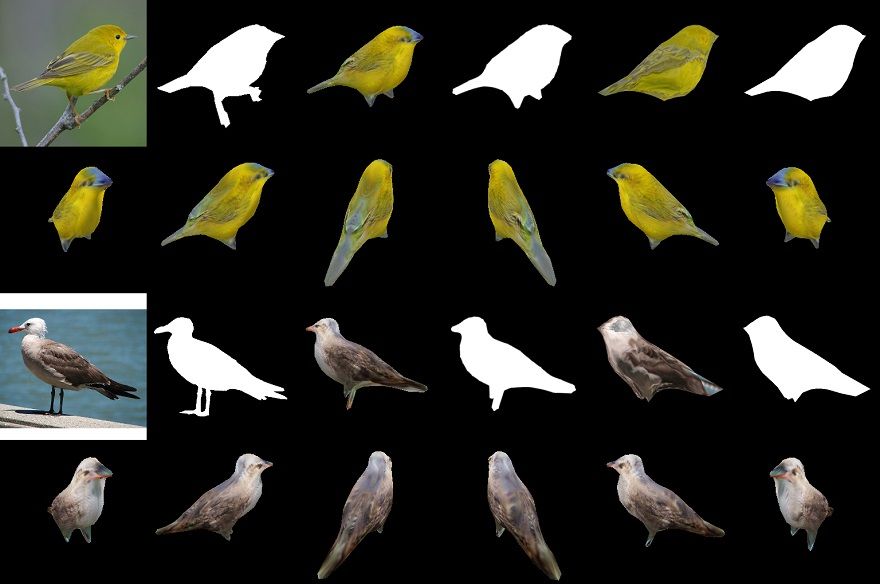

Using images of birds, the AI was able to reconstruct each bird and render it from different angles. Impressively, it also reproduced the birds' various textures.

What does Nvidia have to say?

“In traditional computer graphics, a pipeline renders a 3D model onto a 2D screen. But there is also information to be gained by doing the opposite. For example, a model that could infer a 3D object from a 2D image could track that object better.

“NVIDIA researchers wanted to develop an architecture that could do this while integrating seamlessly with machine learning techniques. The result, DIB-R, produces high-fidelity rendering using an encoder-decoder architecture, a type of neural network that transforms its input into a feature map or vector used to predict specific information, such as the shape, color, texture, and lighting of an image.

“It is particularly useful in fields such as robotics. For an autonomous robot to interact safely and effectively with its environment, it must be able to sense and understand its surroundings. DIB-R could potentially improve this depth perception capability.”

The main promise of this new differentiable interpolation-based renderer (DIB-R) is speed: where AI algorithms used to need weeks of “training” for this kind of task, DIB-R can essentially gain “depth perception” of any object in milliseconds.
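To give a rough feel for what “interpolation-based” means: in the DIB-R paper, each pixel covered by a mesh triangle takes its value as a weighted blend of the triangle's three vertex attributes, using barycentric coordinates as the weights. Because that blend is a plain weighted sum, it is differentiable, which is what lets a neural network learn 3D properties from 2D images. The snippet below is only a minimal, simplified sketch in Python, not Nvidia's implementation; the function names `barycentric`, `covers`, and `shade_pixel` are hypothetical, and it ignores background pixels, which the published method handles with a separate, distance-based soft aggregation step.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]
Color = Tuple[float, float, float]


def barycentric(p: Point, a: Point, b: Point, c: Point) -> Tuple[float, float, float]:
    """Barycentric weights (w_a, w_b, w_c) of point p with respect to triangle abc."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    denom = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)  # twice the signed area
    if denom == 0:
        raise ValueError("degenerate triangle")
    w_a = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / denom
    w_b = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / denom
    w_c = 1.0 - w_a - w_b  # the three weights always sum to 1
    return w_a, w_b, w_c


def covers(p: Point, tri: Sequence[Point]) -> bool:
    """A pixel lies inside (or on the edge of) the triangle when no weight is negative."""
    return all(w >= 0.0 for w in barycentric(p, *tri))


def shade_pixel(p: Point, tri: Sequence[Point], colors: Sequence[Color]) -> Color:
    """Pixel color as a barycentric-weighted blend of the three vertex colors.

    The result is linear in every vertex color, so d(pixel[ch]) / d(colors[i][ch])
    is simply the weight w_i. This is the property that lets gradients flow from
    rendered pixels back to the 3D attributes (hypothetical simplified sketch).
    """
    weights = barycentric(p, *tri)
    return tuple(
        sum(w * col[ch] for w, col in zip(weights, colors)) for ch in range(3)
    )


if __name__ == "__main__":
    tri = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
    colors = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]  # red, green, blue
    print(covers((1.0, 1.0), tri))               # True
    print(shade_pixel((1.0, 1.0), tri, colors))  # (0.5, 0.25, 0.25)
```

Here the pixel at (1, 1) sits closest to the red vertex, so red gets half the weight. A real renderer would do this for every covered pixel and feed the resulting image into a loss function; the sketch only shows why the per-pixel step is differentiable.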

In the future, this algorithm could save a great deal of work when modeling real objects for any application, be it design or video game development, to name just the first two uses that come to mind.

For more information, visit the official Nvidia blog.