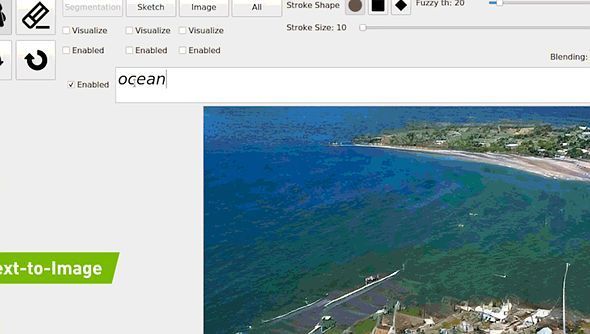

Nvidia has announced GauGAN2, the latest version of its AI-driven painting demo, which can now turn simple written phrases into realistic images. The deep learning model can generate different scenes from just three or four words.

NVIDIA GauGAN2 creates realistic images from text.

GauGAN2 relies on deep learning to generate these highly realistic images.

While the original version could only convert a sketch into a detailed image, GauGAN2 can generate images from phrases like “sunset on the beach.” The ability to create high-quality images from a drawing or sketch is still present and has even been improved, but the major innovation is the ability to generate and adjust these images using text.

GauGAN works this way thanks to generative adversarial networks (GANs), which you can learn more about in this article from Nvidia.

Nvidia says, “With the press of a button, users can create a segmentation map, a higher-level schema that shows the position of objects in the scene. From there, they can move on to drawing, touching up the scene with sketches using labels like sky, tree, rock, and river, allowing the smart brush to turn those doodles into stunning images.”
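To make the idea of a segmentation map more concrete, here is a minimal sketch in Python. It assumes a map can be represented as a small grid in which every cell holds a label ID. The label IDs, the grid, and the `label_coverage` helper are hypothetical and chosen only for illustration; the label names come from the quote above, and nothing here reflects GauGAN2's actual internal format.

```python
from collections import Counter

# Hypothetical label IDs; the names are the examples mentioned in the quote.
LABELS = {0: "sky", 1: "tree", 2: "rock", 3: "river"}

# A tiny 4x6 segmentation map: each cell stores the label ID for that pixel.
segmentation_map = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [2, 2, 1, 1, 3, 3],
    [2, 2, 3, 3, 3, 3],
]

def label_coverage(seg_map, labels):
    """Return the fraction of pixels assigned to each label name."""
    counts = Counter(cell for row in seg_map for cell in row)
    total = sum(counts.values())
    return {labels[i]: counts[i] / total for i in sorted(counts)}

coverage = label_coverage(segmentation_map, LABELS)
for name, share in coverage.items():
    print(f"{name}: {share:.0%}")
```

A generator conditioned on such a map would, roughly speaking, fill each labeled region with matching texture, which is why moving or repainting a region changes the corresponding part of the final image.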

With the addition of text-to-image features, the new version of GauGAN is more customizable. It is also one of the first AI models to combine multiple modalities (text, semantic segmentation, sketch, and style) in a single GAN.

Nvidia adds, “It’s an iterative process where each word the user enters into the text box is added to the image created by the AI.”

Of course, text input is not as precise as creating an image from sketches, but it is useful for generating a starting image from a short description of what we want to see.

You can download it from the following link.