Automated facial recognition raises questions about the protection of human rights, including privacy and freedom of expression. As artificial intelligence and self-learning machines advance, it is important to reconcile public security with our democratic freedoms. Microsoft is therefore calling for a legal framework for facial recognition.

In a blog post, Microsoft weighs in on the debate about facial recognition and its technical possibilities. The author is Brad Smith, head of the company's legal department. Writing as Microsoft's chief legal officer, he points out that every technology and every tool can be put to good or bad use: a broom can sweep the floor or hit someone over the head. The more powerful the tool, Smith argues, the greater the benefit or damage it can cause.

According to Smith, facial recognition technology raises questions that touch on fundamental human rights protections such as privacy and freedom of expression. These issues increase the responsibility of the technology companies that build such products. In his view, they also call for carefully considered government regulation and for norms defining acceptable uses. In a democratic republic, there is no substitute for decision-making by elected representatives on issues that require balancing public safety against the essence of our democratic freedoms. Facial recognition will force both the public and the private sector to take a stand and act accordingly.

Facial recognition technology raises issues that go to the heart of human rights protections, like privacy and freedom of expression. As AI advances, it’s important to balance public safety with our democratic freedoms. https://t.co/LR0sGteHfZ

— Brad Smith (@BradSmi) July 13, 2018

However, the question remains whether Microsoft is simply trying to shirk its own responsibility by calling for state regulation. In any case, it is not enough to point at China. There, under the autocratic rule of a single party, the state is building a technological dystopia of total visual surveillance. People who cross the street against a red light are pilloried in front of their neighbors, and a social scoring system rates every citizen and sanctions misconduct.

With a single mouse click in the consent dialog for the new General Data Protection Regulation (GDPR), Facebook obtains users' approval for facial recognition, while Amazon sells its facial recognition technology to police, the military and government agencies in the USA.

But surveillance is happening here, too. In the aftermath of the G20 summit in Hamburg, police are analyzing mobile phone videos voluntarily submitted by the public with facial recognition software, in search of offenders.

In Berlin, for example, the Ministry of the Interior is "testing" surveillance at the Ostbahnhof station.

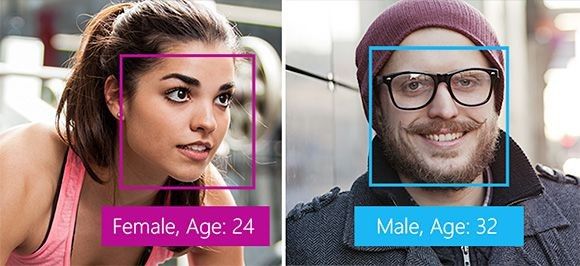

Facial recognition is about more than an iPhone camera focusing on a selfie smile. Computers have long been able to recognize the mood of the people recorded, determine their sex and age with alarming reliability, and build movement profiles of people passing through a monitored space.