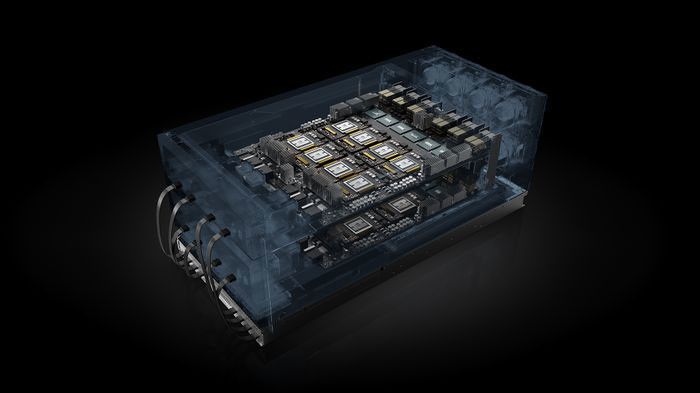

Nvidia HGX-2 is a cloud server platform that links 16 GV100 GPUs through NVSwitch interconnects. Working together, they offer half a terabyte of memory and two petaflops of computing power, spectacular figures that, for now, only the green giant can reach.
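To put those platform-wide totals in perspective, the short sketch below splits them evenly across the 16 GPUs. It assumes that "half a terabyte" means 512 GB and that both memory and compute are shared equally; the constant and function names are illustrative only.

```python
# Platform-wide figures quoted in the article for the HGX-2.
NUM_GPUS = 16
TOTAL_MEMORY_GB = 512    # assumption: "half a terabyte" read as 512 GB
TOTAL_PETAFLOPS = 2.0


def per_gpu_share(total, num_gpus=NUM_GPUS):
    """Split a platform-wide total evenly across the GPUs."""
    return total / num_gpus


memory_per_gpu_gb = per_gpu_share(TOTAL_MEMORY_GB)
tflops_per_gpu = per_gpu_share(TOTAL_PETAFLOPS * 1000)  # 1 petaflop = 1000 teraflops

print(f"{memory_per_gpu_gb:.0f} GB and {tflops_per_gpu:.0f} TFLOPS per GPU")
# prints "32 GB and 125 TFLOPS per GPU"
```

Under these assumptions, each GPU contributes 32 GB of memory and 125 teraflops to the total.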

Nvidia HGX-2 is the first platform to merge HPC and artificial intelligence

According to a statement from Nvidia, the HGX-2 can process 15,500 images per second on the ResNet-50 training benchmark and can replace up to 300 CPU-based servers, which would cut costs significantly. Server makers such as Lenovo and original design manufacturers such as Foxconn plan to bring the first HGX-2-based systems to market later this year. Nvidia also says that hardware and software enhancements to its deep learning computing platform have delivered a 10-fold increase in performance on deep learning workloads within six months.

A recent OpenAI study found that the computing power used to train the best-known AI systems has doubled roughly every 3.5 months since 2012. Against this backdrop, Nvidia GPUs are increasingly being used to train on and process unlabeled data such as photos and videos.
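To get a feel for what a 3.5-month doubling time implies, the sketch below converts it into a growth factor over an arbitrary period using `2 ** (months / doubling_time)`. It assumes smooth exponential growth, which is a simplification, and the function name is illustrative.

```python
DOUBLING_TIME_MONTHS = 3.5  # figure quoted from the OpenAI study


def growth_factor(months, doubling_time=DOUBLING_TIME_MONTHS):
    """Return how many times compute demand multiplies over `months`,
    assuming it doubles once every `doubling_time` months."""
    return 2 ** (months / doubling_time)


print(growth_factor(3.5))            # one doubling period -> 2.0
print(round(growth_factor(12), 1))   # one year -> 10.8
```

In other words, a 3.5-month doubling time corresponds to demand growing more than tenfold per year.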

“CPU scaling has slowed down at a time when the demand for computing is skyrocketing. The Nvidia HGX-2 with Tensor Core GPUs provides the industry with a powerful and versatile computing platform that merges HPC and AI to solve the world’s greatest challenges,” said Jensen Huang, founder and CEO of Nvidia.

The introduction of the Nvidia HGX-2 follows last year’s release of the HGX-1, which is built around eight GPUs. The HGX-1 reference architecture has been used in GPU servers such as Facebook’s Big Basin and Microsoft’s Project Olympus.