Intel is planning a multi-chip module (MCM) design with four dies interconnected by EMIB (Embedded Multi-die Interconnect Bridge) for its upcoming Xeon Sapphire Rapids processors, which are expected to launch later this year. Several images of the design diagram for these processors have leaked.

It looks like this MCM approach will reach Intel's processors before it reaches graphics cards, since Sapphire Rapids could arrive ahead of AMD RDNA3 or NVIDIA Hopper. The leaked diagrams have caused a stir on Twitter, with everyone commenting on the news, and we are helping spread the word.

Intel Sapphire Rapids, the Xeon with an MCM design

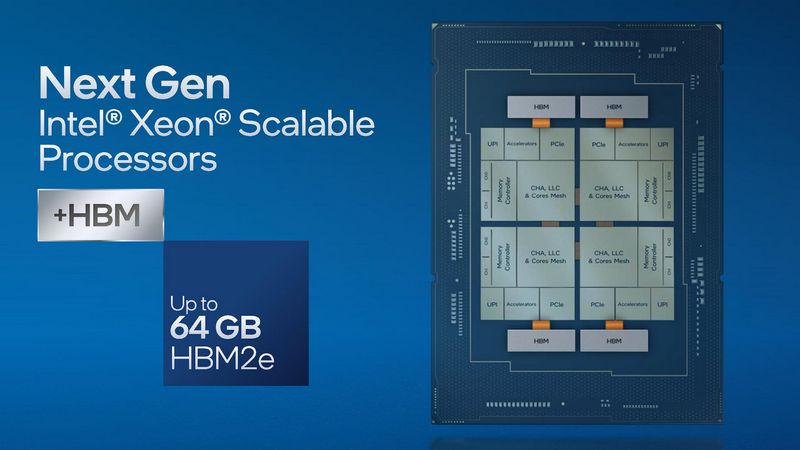

The Intel Xeon Scalable Sapphire Rapids processors will be the brand’s next bet for data centers, servers, and the enterprise market. They are in the news after Locuza, a Twitter user, posted four diagrams of the dies. The four dies form an MCM design that brings a full multi-core processor to life: cores, an integrated northbridge, memory interfaces, PCIe, and other I/O connections. The dies are interconnected via 5 EMIB bridges so that the cores on one die can seamlessly access the I/O and memory controllers on the other dies.

Several outlets describe the goal the same way: reduce interconnect latency and increase bandwidth through microscopically dense wiring in the silicon bridges that join the dies. Within each die, the approach is similar to previous generations: a mesh interconnect, with multiple IP blocks arranged on a grid and connected by a network of ring buses.
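To make the mesh idea more concrete, here is a toy model of how traffic crosses a grid of stops. It is a simplified sketch, not Intel's actual routing policy: it assumes a rectangular grid and simple dimension-order ("X then Y") routing, and the 4×5 grid size is hypothetical rather than taken from the leaked diagrams.

```python
from itertools import product


def xy_route(src, dst):
    """Return the mesh stops visited from src to dst (both (x, y) tuples),
    moving along the row (X) first and then along the column (Y).
    Dimension-order routing is an assumption for illustration only."""
    (x0, y0), (x1, y1) = src, dst
    path = [(x0, y0)]
    step = 1 if x1 >= x0 else -1
    for x in range(x0 + step, x1 + step, step):  # walk horizontally
        path.append((x, y0))
    step = 1 if y1 >= y0 else -1
    for y in range(y0 + step, y1 + step, step):  # then walk vertically
        path.append((x1, y))
    return path


def average_hops(cols, rows):
    """Average number of hops over all ordered pairs of stops on a
    hypothetical cols x rows grid (self-pairs count as 0 hops)."""
    stops = list(product(range(cols), range(rows)))
    total = sum(len(xy_route(a, b)) - 1 for a in stops for b in stops)
    return total / (len(stops) ** 2)


if __name__ == "__main__":
    print(xy_route((0, 0), (2, 1)))
    print(average_hops(4, 5))
```

The point of the model is that hop count grows with the distance between stops on the grid, which is why a die-to-die link like EMIB has to be dense and fast: a request that leaves one die adds the bridge crossing on top of the on-die hops.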

Each die has 15 Golden Cove (high-performance) cores, and each core has 2 MB of dedicated L2 cache and a 1.875 MB slice of L3 cache. Across the 60 cores in the package, that adds up to 120 MB of L2 and 112.5 MB of L3. The source notes that each die also has a memory controller tile with a 128-bit DDR5 PHY. This interface drives two DDR5 channels (four 40-bit sub-channels, each carrying 32 data bits plus 8 ECC bits), for a total of eight DDR5 channels in the package.
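The package totals above follow directly from the per-die figures multiplied by four dies. The short sketch below redoes that arithmetic; the `DieConfig` and `package_totals` names are hypothetical, and the split of each 40-bit sub-channel into 32 data bits and 8 ECC bits is the standard DDR5 ECC layout rather than something shown in the leak.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DieConfig:
    """Per-die figures from the leaked diagrams (names are illustrative)."""
    cores: int = 15                  # Golden Cove cores per die
    l2_per_core_mb: float = 2.0      # dedicated L2 per core
    l3_per_core_mb: float = 1.875    # L3 slice per core
    ddr5_channels: int = 2           # channels behind one 128-bit DDR5 PHY
    subchannels_per_channel: int = 2
    subchannel_data_bits: int = 32   # assumption: standard DDR5 data width
    subchannel_ecc_bits: int = 8     # assumption: standard DDR5 ECC width


def package_totals(die: DieConfig, dies: int = 4) -> dict:
    """Multiply per-die resources by the number of dies in the package."""
    cores = die.cores * dies
    subchannels_per_die = die.ddr5_channels * die.subchannels_per_channel
    return {
        "cores": cores,
        "l2_mb": cores * die.l2_per_core_mb,
        "l3_mb": cores * die.l3_per_core_mb,
        "ddr5_channels": die.ddr5_channels * dies,
        "ddr5_subchannels": subchannels_per_die * dies,
        # Data width of one die's PHY, which should match the 128 bits quoted
        "phy_data_bits_per_die": subchannels_per_die * die.subchannel_data_bits,
        # Width of each sub-channel including ECC, i.e. the "40-bit" figure
        "subchannel_width_bits": die.subchannel_data_bits + die.subchannel_ecc_bits,
    }


if __name__ == "__main__":
    print(package_totals(DieConfig()))
```

Keeping the per-die numbers in one place makes it easy to see how the 128-bit PHY and the 40-bit sub-channels fit together: four sub-channels of 32 data bits give 128 data bits, and the extra 8 bits per sub-channel are ECC.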

At the top of each die sits the PCI-Express 5.0 interface, with 32 lanes per die: 128 PCI-Express 5.0 lanes in total, an impressive figure! Intel is also targeting AI and machine learning with its accelerator blocks, which include the Intel Data Streaming Accelerator, QuickAssist Technology, and DLBoost 2.0. It is clearly a chip for big data and cloud computing, with components aimed at training deep learning neural networks. Finally, the diagrams show x24 UPI links, which connect sockets together, on each of the four Sapphire Rapids dies.
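To put 128 PCIe 5.0 lanes in perspective, the sketch below estimates their raw bandwidth. It only accounts for the PCIe 5.0 signaling rate (32 GT/s per lane) and its 128b/130b line encoding; real throughput is lower once protocol overhead such as packet headers and flow control is included, so treat the result as an upper bound. The function names are hypothetical.

```python
PCIE5_GT_PER_S = 32.0            # PCIe 5.0 signaling rate per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def lane_bandwidth_gbytes() -> float:
    """Per-lane bandwidth in GB/s, per direction, after line encoding only."""
    return PCIE5_GT_PER_S * ENCODING_EFFICIENCY / 8  # 8 bits per byte


def package_pcie_bandwidth(lanes_per_die: int = 32, dies: int = 4):
    """Total lanes and raw per-direction bandwidth for the whole package."""
    lanes = lanes_per_die * dies
    return lanes, lanes * lane_bandwidth_gbytes()


if __name__ == "__main__":
    lanes, gbytes = package_pcie_bandwidth()
    print(f"{lanes} lanes, ~{gbytes:.1f} GB/s per direction")
```

Under these assumptions each lane carries a little under 4 GB/s in each direction, so the full complement of lanes lands in the neighborhood of 500 GB/s per direction before protocol overhead.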