The launch of HBM4 memory may be on the horizon, and although it has yet to be unveiled, estimates of the speeds it will reach are already emerging. HBM (High Bandwidth Memory) has drawn significant attention in the technology industry because it supplies the substantial memory bandwidth that AI accelerators need.

Advancements in HBM4 Memory Technology: A Glimpse into Future Speeds

According to TrendForce estimates, HBM4 memory is projected to double its bandwidth by 2026, thanks to its 2,048-bit bus. This enhancement is expected to notably speed up artificial intelligence and other high-performance computing workloads.

HBM4 memory is anticipated to hit the market in 2026, succeeding the current HBM3 memory. Existing HBM stacks have an interface width of 1,024 bits, and this figure is set to double to 2,048 bits with the introduction of HBM4.
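As a rough illustration of why a wider bus means more bandwidth, the sketch below computes peak per-stack bandwidth as bus width times per-pin data rate. The 6.4 Gb/s per-pin rate is an assumption taken from the baseline HBM3 specification. The source gives no per-pin rate for HBM4, so the same value is reused here purely to isolate the effect of the wider bus. The function name is illustrative.

```python
def stack_bandwidth_gb_per_s(bus_width_bits: int, pin_rate_gbit_per_s: float) -> float:
    """Peak bandwidth of one HBM stack in GB/s.

    Each pin transfers pin_rate_gbit_per_s gigabits per second, so the
    stack moves bus_width_bits times that much; dividing by 8 converts
    gigabits to gigabytes.
    """
    return bus_width_bits * pin_rate_gbit_per_s / 8


# Assumption: 6.4 Gb/s per pin (baseline HBM3). The HBM4 per-pin rate is
# not given in the article, so the same value is reused for comparison.
PIN_RATE_GBIT_PER_S = 6.4

hbm3_bandwidth = stack_bandwidth_gb_per_s(1024, PIN_RATE_GBIT_PER_S)
hbm4_bandwidth = stack_bandwidth_gb_per_s(2048, PIN_RATE_GBIT_PER_S)

print(f"1,024-bit stack: {hbm3_bandwidth:.1f} GB/s")
print(f"2,048-bit stack: {hbm4_bandwidth:.1f} GB/s")
print(f"Ratio: {hbm4_bandwidth / hbm3_bandwidth:.1f}x")
```

At an equal per-pin rate, doubling the bus width doubles the peak bandwidth of a stack. The real HBM4 figure will depend on whatever per-pin rate the final products ship with.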

The new memory will not only increase bandwidth but will also move from the current 12-Hi stacks to around 16-Hi, that is, roughly 16 DRAM layers per stack. Taller stacks will give HBM modules greater capacity than before. This transition to taller stacks, however, is only expected to be complete by 2027.
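To make the capacity gain concrete, here is a minimal sketch that multiplies the number of layers by the density of each DRAM die. The 24 Gb die density is an assumption chosen for illustration only, since the source does not state which dies HBM4 stacks will use. The function name is likewise hypothetical.

```python
def stack_capacity_gb(layers: int, die_density_gbit: int) -> float:
    """Capacity of one HBM stack in GB: layers x die density (Gb) / 8."""
    return layers * die_density_gbit / 8


# Assumption: 24 Gb DRAM dies in both cases, so only stack height varies.
DIE_DENSITY_GBIT = 24

capacity_12hi = stack_capacity_gb(12, DIE_DENSITY_GBIT)
capacity_16hi = stack_capacity_gb(16, DIE_DENSITY_GBIT)

print(f"12-Hi stack: {capacity_12hi:.0f} GB")
print(f"16-Hi stack: {capacity_16hi:.0f} GB")
print(f"Increase: {(capacity_16hi / capacity_12hi - 1) * 100:.0f}%")
```

With the same die density, going from 12 to 16 layers adds about a third more capacity per stack. Denser dies would raise both figures further.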

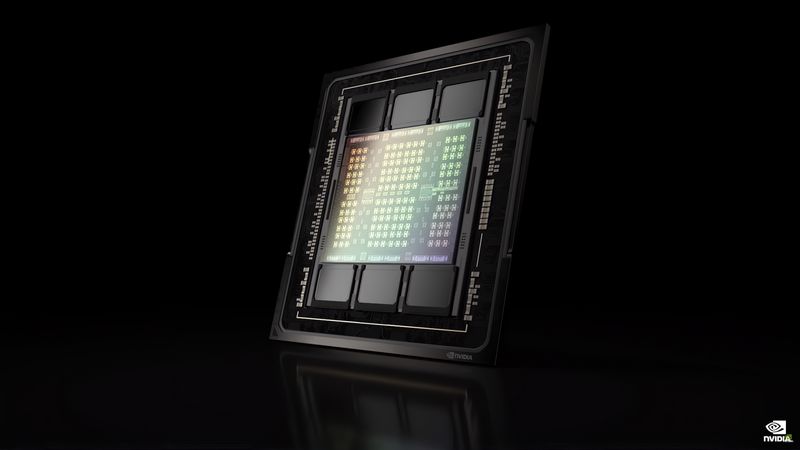

The AI segment has experienced exponential growth in recent years, necessitating new hardware to sustain this expansion. The evolution of HBM memory is crucial, as AI models benefit from both greater memory capacity and higher bandwidth.

This information also indicates that HBM3 memory will have a long life of between 3 and 4 years, powering several generations of Nvidia and AMD AI and HPC accelerator cards. We will keep you informed of all updates.